About the ECF

Through the Emerging Challenges Fund, we offer anyone the opportunity to contribute to a pooled, thesis-driven fund that our expert grantmaking teams will direct to outstanding organizations where additional funding can quickly make a major difference.

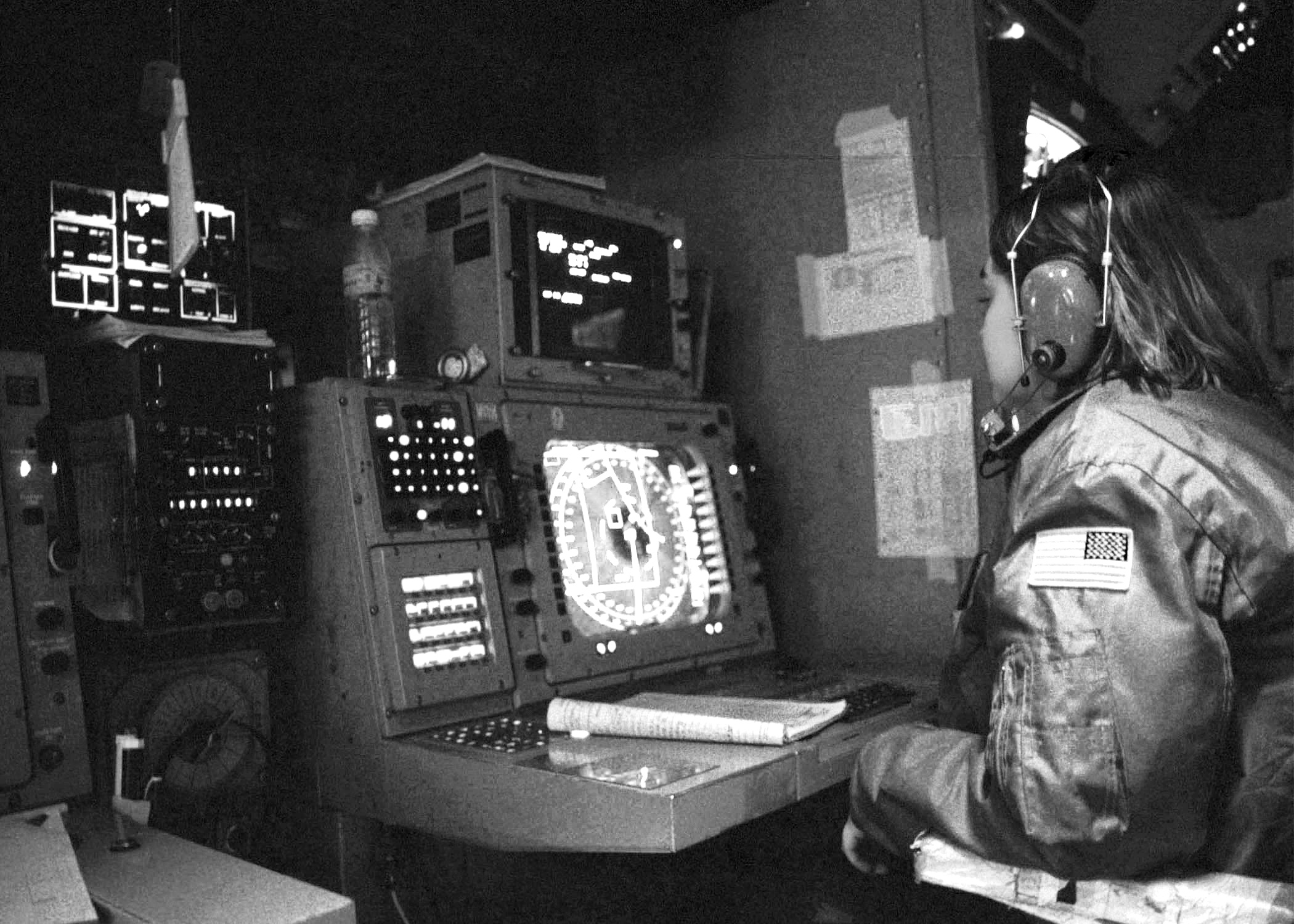

Over the next decade, emerging technologies will pose significant challenges to global security. Rapid advances in artificial intelligence could create advanced AI systems with goals that diverge from human interests and grant authoritarians unprecedented means of control. We also face rising nuclear and biological risks as technological advances accelerate and automate elements of nuclear decision-making and lower the barriers for malicious actors to carry out large-scale biological attacks.

We aim to prepare the world for these challenges. In selecting projects, the ECF considers Longview’s usual grantmaking criteria and two further tests:

- Does the project have a legible theory of impact? ECF grantees must have a compelling, transparent, and public case for how their activities will have an impact, and that case must appeal to a wide range of donors.

- Will the project benefit from diverse funding? Policy organizations sometimes benefit from the support of the ECF’s 2,000+ donors when demonstrating their independence from major funders and industry actors. ECF grantees often, though not always, pass this test.

In 2025, ECF donors supported organizations advancing both policy and research. On the policy side, grantees worked to shape frontier AI governance in the US and Europe, including by building government capacity through talent pipelines and facilitating discussions on AI and arms control between the US and China. On the research side, we funded groups evaluating AI system capabilities, their potential misuse by malicious actors, and the broader societal implications of rapid AI progress.

For those seeking to invest in a safer future, this fund provides unique expertise across beneficial AI, biosecurity, and nuclear weapons policy, and fills critical funding gaps at organizations that need rapid financial support and a diversity of donors.

For major donors

Longview’s focus—and the source of most of our impact—is helping major donors give.

- Give to our private funds. For donors giving over $100K, we offer access to our private frontier AI, digital sentience, and nuclear weapons policy funds. Our private fund reports are sent directly to donors rather than distributed publicly, allowing us to use those funds to support confidential, risky, or large-scale projects.

- Get bespoke advice. For major donors seeking to develop significant philanthropic portfolios, we provide a personalized end-to-end service at no cost. This includes detailed analysis, expert-led learning series, residential summits, tailored strategic planning, grant recommendations, due diligence, and impact assessment.

Please get in touch with our CEO, Simran Dhaliwal, at simran@longview.org.